Website & Server Optimization

Even though Google harps on about high-quality content, other aspects of your website and hosting environment also contribute to how your website ranks in search engines. Some of what we cover below is simple in principle (though it can be tricky to get right), while other issues may require revisiting the entire design and architecture of your website.

Load Time

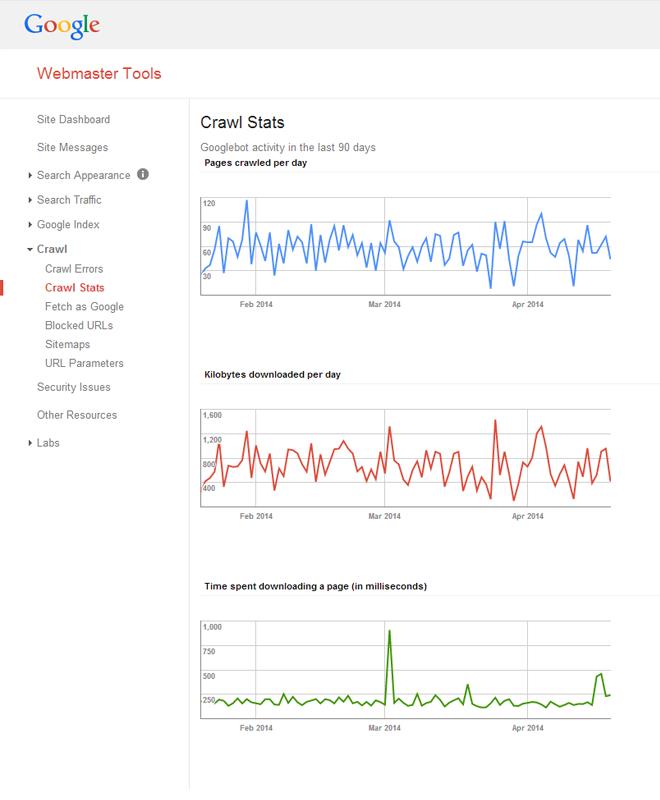

Load time refers to the amount of time it takes for your server/webhost to serve a page requested by a user or search engine spider. Load time is generally measured in milliseconds; however, if your server cannot serve pages quickly enough, it will cause problems with both rankings and bounce rate (people leaving a site after looking at only one page, assuming in this case that they even wait long enough for your page to load). So it is important to test how quickly your hosting service is able to serve up your web pages. Keep in mind that the problem may lie not with your host but with the way your site is set up. You can use a multitude of tools to check how quickly the pages of your site load, or use Google Search Console (formerly Google Webmaster Tools) to find out how quickly Google is able to load your website's pages. You will find this information in the Crawl Stats report (Webmaster Tools > Crawl > Crawl Stats in the old interface; Settings > Crawl stats in the current Search Console). You can also use Google's PageSpeed Insights developer tool to pinpoint some of the issues that may be slowing down your site.
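If you want a quick, scriptable check alongside those tools, the Python sketch below times a single page request. It measures only how long the server takes to return the HTML document (not the images, CSS, or JavaScript a browser would also fetch, which tools like PageSpeed Insights account for), and the URL in the usage comment is a placeholder for a page on your own site.

```python
import time
import urllib.request


def measure_load_time(url: str, timeout: float = 10.0) -> tuple[int, float]:
    """Fetch `url` once and return (HTTP status, elapsed seconds).

    Only the HTML document itself is timed; images, CSS and JavaScript
    that a browser would also download are not included.
    """
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=timeout) as response:
        response.read()  # read the full body so transfer time is counted
        status = response.status
    return status, time.perf_counter() - start


# Usage (placeholder URL; replace with a page on your own site):
#   status, seconds = measure_load_time("https://www.example.com/")
#   print(f"HTTP {status} in {seconds * 1000:.0f} ms",
#         "OK" if seconds < 1.0 else "needs work")
```

Run it several times and look at the typical value rather than a single result, since network conditions vary from one request to the next.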

Once you have checked how quickly your web pages load, use that information to decide on your next step. If the pages load in a reasonable amount of time (less than one second per page), there is not much to do unless you would like to improve your site's speed even further. However, if your pages take a few seconds to load, you certainly have some work to do to get the load time below one second per page. Here are some of the things you can do to improve load time:

- Minimize HTTP requests

- Externalize your CSS and JavaScript code as much as possible

- Optimize your images so you are not serving unnecessarily large image files that add no visible quality

- Use server-side caching and Gzip compression to reduce server response time and file size

- Reduce 301 redirects

- If possible, use a content delivery network
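Gzip is normally switched on in your web server or CDN configuration rather than written by hand, but the short Python sketch below shows why it is worth enabling: text such as HTML, CSS, and JavaScript is highly repetitive and shrinks dramatically when compressed. The sample markup is made up for illustration.

```python
import gzip


def gzip_savings(payload: bytes, level: int = 6) -> tuple[int, int]:
    """Return (original size, gzip-compressed size) in bytes."""
    return len(payload), len(gzip.compress(payload, compresslevel=level))


# Made-up, repetitive HTML, similar in spirit to a long navigation menu.
sample_html = (
    b"<ul>" + b'<li><a href="/widget.html">Widgets</a></li>' * 200 + b"</ul>"
)
original, compressed = gzip_savings(sample_html)
print(f"{original} bytes uncompressed, {compressed} bytes gzipped")
```

A server only sends the compressed version when the browser's request includes an `Accept-Encoding: gzip` header, and it labels the response with `Content-Encoding: gzip` so the browser knows to decompress it.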

robots.txt

The robots.txt file, which is part of the Robots Exclusion Protocol, is a file stored in a website's root directory (e.g., example.com/robots.txt) and is used to give crawl instructions to the automated web crawlers (including search engine spiders) that visit your website.

Webmasters use the robots.txt file to tell crawlers which parts of their site they would like to keep out of crawls. It can also set a crawl-delay parameter (honored by some crawlers, although Google ignores it) and point out the location of the sitemap file(s).
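Here is a hypothetical robots.txt for example.com that covers all three uses, checked with Python's standard-library `urllib.robotparser` (Python 3.8 or later is needed for `site_maps()`). The disallowed paths, the 10-second delay, and the sitemap URL are made up for illustration, and "ExampleBot" is a placeholder crawler name.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents for example.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
Crawl-delay: 10
Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Ask the same questions a polite crawler would ask before fetching a page.
for path in ("/widget.html", "/admin/settings"):
    allowed = parser.can_fetch("ExampleBot", "https://www.example.com" + path)
    print(path, "allowed" if allowed else "disallowed")

print("Crawl-delay:", parser.crawl_delay("ExampleBot"))  # seconds between requests
print("Sitemaps:", parser.site_maps())
```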

404 Pages

Users are shown a 404 page when they try to reach a page that no longer exists, often because they have either clicked a broken link or mistyped the URL.

By default, a 404 page is just an empty page (save for a small notice stating that the requested page could not be found), which usually means that users (and search engine spiders) will simply turn back and leave the site, and this is certainly not something you want to happen. To solve this issue without 301 redirecting all error pages to your home page or some other destination on your website, you can use a custom 404 page that gives users (and search engine spiders) links and navigational elements to follow into your site. A custom 404 page could simply be a smaller version of your website's sitemap that directs users or search engine spiders to the main sections of your site.

Of course, not every missing URL should be redirected somewhere, and that is where a custom 404 page comes into play. The server's default 404 page will be shown if you do nothing, but creating a customized 404 page is highly recommended.

Custom 404 Error Page

A 404 error is what gets reported to browsers or crawlers when a requested URL (webpage) does not exist. Having too many 404 errors can be detrimental to organic search engine rankings. Depending on the URL producing the 404 error, it might be helpful to 301 redirect the non-existent URL to an appropriate location. For example, if someone has linked to your widgets page (www.example.com/widget.html) but misspelled the URL (www.example.com/wigdet.html), and that link is sending you a lot of clicks, it would be a good idea to 301 redirect .../wigdet.html to .../widget.html. That way you are not serving an unnecessary number of 404 pages, and you also capture some of the value of the link.
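The decision described above can be sketched as a small lookup: serve the page if it exists, 301 redirect known misspellings that point somewhere real, and return a 404 for everything else. The `REDIRECTS` table and the `resolve()` function are hypothetical names for illustration; in practice this logic usually lives in your web server or CMS redirect settings.

```python
from typing import Optional

# Hypothetical table: misspelled inbound URL -> correct existing page.
REDIRECTS = {
    "/wigdet.html": "/widget.html",
}


def resolve(path: str, existing_pages: set[str]) -> tuple[int, Optional[str]]:
    """Return (HTTP status, redirect target or None) for a requested path."""
    if path in existing_pages:
        return 200, None        # real page: serve it normally
    target = REDIRECTS.get(path)
    if target in existing_pages:
        return 301, target      # known misspelling: redirect permanently
    return 404, None            # genuinely missing: show the custom 404 page


pages = {"/", "/widget.html"}
print(resolve("/wigdet.html", pages))  # (301, '/widget.html')
print(resolve("/gadget.html", pages))  # (404, None)
```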

When a user stumbles across a 404 error, the default 404 page does very little to guide them to a useful section of the website; the only available option is to click the back button. Without a way to move forward, or even a link to the homepage, there is nothing to help users find what they are looking for.

A default 404 page is equally unhelpful for search engine spiders. Spiders crawl websites by following every available link, effectively building a web or network of information. When a spider runs into a default 404 page, it is, like its human counterparts, unable to move forward. Unlike human users, however, spiders do not hit the back button to look for another route. When a spider ends up on such a page, it cannot continue crawling your website from there. A custom 404 page gives spiders something to follow even when they run into an error.
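To see the difference from a crawler's point of view, the Python sketch below pulls the `href` values out of two pages with the standard-library `html.parser`, roughly as a spider does when deciding where to go next. Both pages (including the `/contact.html` link) are made-up examples, and real crawlers are far more sophisticated than this.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect href values from <a> tags, roughly as a crawler discovers links."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def extract_links(html: str) -> list[str]:
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    return collector.links


DEFAULT_404 = "<html><body><h1>Not Found</h1><p>The requested URL was not found.</p></body></html>"

CUSTOM_404 = """<html><body>
<h1>Sorry, we couldn't find that page</h1>
<ul>
  <li><a href="/">Example.com widget store home page</a></li>
  <li><a href="/widget.html">Browse our widgets</a></li>
  <li><a href="/contact.html">Report this broken link</a></li>
</ul>
</body></html>"""

print(extract_links(DEFAULT_404))  # [] : a dead end for the crawler
print(extract_links(CUSTOM_404))   # ['/', '/widget.html', '/contact.html']
```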

When creating and designing a custom 404 page, there are a number of important things to keep in mind. The most important job of an error page is to clearly tell users that the page they were looking for could not be found, and to apologize.

Offer users a way to get back on track, a way to contact the webmaster about the problem, and a link to the homepage. Remember to use meaningful anchor text; simply writing "Home" is not recommended. Also consider including links to popular pages or articles on the website. Finally, make sure that your custom 404 error page returns an HTTP 404 status code to search engines so that they do not index it.
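As a minimal sketch of that last point, the handler below uses Python's built-in `http.server` to serve a friendly 404 page (an apology, helpful links, a way to contact the webmaster) while still sending the 404 status code. The page contents, the `PAGES` dictionary, and the webmaster address are hypothetical; on a real site you would configure the same behavior in your server or CMS (for example, with Apache's `ErrorDocument 404` directive pointing at a local page).

```python
from http.server import BaseHTTPRequestHandler

# Hypothetical site content; a real site would serve files or CMS output.
PAGES = {
    "/": b"<html><body><h1>Home</h1></body></html>",
    "/widget.html": b"<html><body><h1>Widgets</h1></body></html>",
}

NOT_FOUND_PAGE = b"""<html><head><title>Page not found</title></head><body>
<h1>Sorry, we couldn't find that page</h1>
<p>The page may have moved, or the link may be mistyped.</p>
<ul>
  <li><a href="/">Example.com widget store home page</a></li>
  <li><a href="/widget.html">Our most popular widgets</a></li>
  <li><a href="mailto:webmaster@example.com">Tell the webmaster about this broken link</a></li>
</ul>
</body></html>"""


class SiteHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = PAGES.get(self.path)
        # Real pages get 200; anything else gets the friendly page but with
        # a 404 status, so search engines do not index it as a real page.
        status = 200 if body is not None else 404
        if body is None:
            body = NOT_FOUND_PAGE
        self.send_response(status)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


# To try it locally (port 8000 is an arbitrary choice):
#   from http.server import HTTPServer
#   HTTPServer(("127.0.0.1", 8000), SiteHandler).serve_forever()
```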

Redirects

A redirect forwards requests for one URL to another. For example, if a particular page on your site has had a file name change (e.g., from www.example.com/widgets.html to www.example.com/affordable-widgets.html), you would need to redirect the old URL to the new URL. There are two types of redirects we will cover here: the 301 redirect and the 302 redirect.

302 Redirect

A 302 redirect is a temporary redirect, and it should be avoided for SEO purposes whenever a move is actually permanent. This redirect tells search engine spiders that the requested page has moved temporarily but will be made available again at its original URL in the future; for this reason, search engines generally keep the original URL indexed, and a 302 has traditionally been treated as passing little or no link value to the new URL.

301 Redirect

A 301 redirect is a permanent redirect which passes most of the link value from the old to the new page, and is the recommended method of redirecting old/changed URLs to their new destination.
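The difference between the two comes down to the status code the server sends along with the `Location` header. The Python sketch below, built on the standard-library `http.server`, answers the renamed widgets page with a 301 and a hypothetical temporarily unavailable page with a 302; the rule table and the second pair of paths are made up for illustration.

```python
from http.server import BaseHTTPRequestHandler

# Hypothetical rules: requested path -> (status code, new location).
REDIRECT_RULES = {
    # File name changed for good: permanent 301 redirect.
    "/widgets.html": (301, "/affordable-widgets.html"),
    # Page offline for a short while (hypothetical): temporary 302 redirect.
    "/widget-of-the-month.html": (302, "/widget.html"),
}


class RedirectHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        rule = REDIRECT_RULES.get(self.path)
        if rule is None:
            self.send_error(404)
            return
        status, location = rule
        self.send_response(status)
        # Browsers and spiders read the new address from the Location header.
        self.send_header("Location", location)
        self.send_header("Content-Length", "0")
        self.end_headers()


# To try it locally (port 8000 is an arbitrary choice):
#   from http.server import HTTPServer
#   HTTPServer(("127.0.0.1", 8000), RedirectHandler).serve_forever()
# then inspect the response headers with: curl -i http://127.0.0.1:8000/widgets.html
```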